The Central Bank of the United Arab Emirates (‘CBUAE’) has published two documents that set out its model risk management principles. The first is the Model Management Standards (‘MMS’), which sets out the lifecycle framework that applies to all models; this was covered in our Blog 1 (October 2022). The second is the Model Management Guidance (‘MMG’), which is the subject of this blog.

The main bridge between the MMS and the MMG is that all the models described in the MMG need to follow the principles set out in the MMS. The MMG covers six model types and provides guidance on how to develop and validate them. Models not covered in the MMG are still subject to the principles of the MMS (especially data quality, developmental rigour and the ability to justify modelling assumptions).

The key themes that carry across from the MMS to the MMG are: the need for high-quality data to build models, explainability of the modelling approach, independent validation and rigorous governance at each stage of the lifecycle. Whilst the MMS is generally principles-based, the MMG is relatively prescriptive when describing the specific model examples; however, it does allow institutions to deviate from the guidance if the methodologies used can be appropriately justified.

The main links or common themes between MMS and MMG, and indeed across the model examples mentioned in MMG, are:

Given the touchpoints that the governance requirement has with each and every stage of the model lifecycle (highlighted in both MMS and MMG), we feel that the role of the Model Oversight Committee is key to successfully implementing the Model Management Framework. Its members are likely to need in-depth knowledge and experience of a wide range of models and banking applications. A seat on the committee is a crucial role within the institution and requires significant time commitment.

A key challenge arising from the Model Management Framework, and from the expanded use of models across the banking industry, is the interconnected nature of those models. This now requires developers, validators and those responsible for oversight to understand a broader range of modelling risks and to be much more holistic in their approach to building business solutions.

For example, a credit risk modeller now needs to consider macroeconomic effects to a much greater degree than in the past. The key challenge and risk for banking institutions across the UAE, and indeed globally, will be to attract the necessary skills (or appoint appropriate third parties), in sufficient numbers given the need for development, independent validation and governance, to manage their models and the associated model risk (as required by the MMS and MMG).

Before we delve into the details of the MMG, it is worth highlighting the regulator's expectations and the typical challenges some banks may face. We will cover the mitigation of these challenges in our upcoming webinar on this topic.

Expectation of the CBUAE

All institutions are expected to identify gaps between their practice and the MMS and MMG and, if necessary, establish a remediation plan to reach compliance.

The outcome of this self-assessment and the plan to meet the requirements of the MMS and the MMG must be submitted to the CBUAE no later than 6 months (June 2023) after the effective date of the MMS.

Banks must demonstrate continuous improvements towards meeting these requirements within a reasonable timeframe depending on the complexity and the systemic risk of each institution. This timeframe will be approved by the CBUAE following the review of the self-assessment. The remediation plan and the associated timing must be detailed, transparent, and justified. The plan must address each gap at a suitable level of granularity.

Potential consequences of non-compliance

In the event that an institution (regardless of its size) is unable to comply with the MMS and the MMG, it must implement a remedial process. This may involve reducing the number and/or complexity of its models in order to improve the quality of the remaining models. Subsequently, the institution could increase the number of models and/or their complexity while maintaining their quality.

Full compliance is expected from institutions with respect to the general principles described in Part I and Part II of the MMS. For the MMG, whilst alternative approaches can be considered, the focus is on the rationale and the thought process behind modelling choices. Institutions should avoid material inconsistencies, cherry-picking, reverse-engineering and positive bias, i.e. modelling approaches that deliberately favour a desired outcome. Evidence of an institution defying the general principles or abusing the MMS in this way will warrant a supervisory response ranging from in-depth scrutiny to formal enforcement action.

Institutions that repeatedly fall short of the requirements and/or do not demonstrate continuous improvements will face greater scrutiny and could be subject to formal enforcement action by the CBUAE. In particular, continuously and structurally deficient models must be replaced and should no longer be used for decision making and reporting.

Typical challenges

Based on our interaction with the market, firms are asking questions of the following type as they begin their journey towards compliance with the MMS and MMG.

The following models are covered in MMG:

1. Ratings Models

Key Challenges

Rating models are often the basis of many credit risk applications (such as risk management, provisioning, pricing, collections, capital allocation and IFRS9) and cover both retail and corporate risk assessment. Therefore, a poorly developed or managed model will have effects that propagate across many decision areas of the bank.

The development of such models is well documented, so the MMG sets out only the following minimum requirements:

Governance & Strategy – The management of the bank’s rating models needs to follow the model lifecycle set out in the MMS, with models ideally based on internally collected and stored historical data and using justifiable development, validation and monitoring methodologies.

Data Collection & Analysis – Banks are encouraged to collect their own rating model development data (with the collection, cleansing and manipulation processes fully documented and approved). Data used for modelling should ideally be at obligor and facility level and of sufficient volume to be statistically valid. Low-default techniques can be employed where necessary, but should be fully justified.

Segmentation – Portfolio segments need to contain statistically homogeneous groups of obligors whilst being heterogeneous relative to neighbouring segments. Segments are generally split by product, customer type and differences in historical default or credit performance.

Default Definition – Banks should develop and document two default definitions: an operational definition used for business strategy decisions, and a second used for the estimation and calibration of default probabilities (used in the regulatory context). Whilst these definitions can be the same, the operational definition is usually tighter than the regulatory one (e.g. 60 days past due (dpd) as opposed to 90 dpd). Appropriate levels of conservatism should be built into the definitions.
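To illustrate why the choice of definition matters, the short Python sketch below counts defaults in the same made-up sample under a 60 dpd operational threshold and a 90 dpd regulatory threshold. The function name `default_rate` and the sample data are hypothetical; the thresholds are simply the example figures quoted above.

```python
# Example thresholds quoted above; each bank sets and documents its own.
OPERATIONAL_DPD = 60   # operational (business strategy) definition
REGULATORY_DPD = 90    # definition used for PD estimation and calibration


def default_rate(max_dpd_by_obligor, threshold):
    """Share of obligors whose maximum days past due over the outcome
    window reached the given threshold (hypothetical helper)."""
    if not max_dpd_by_obligor:
        raise ValueError("no obligors supplied")
    defaults = sum(1 for dpd in max_dpd_by_obligor if dpd >= threshold)
    return defaults / len(max_dpd_by_obligor)


# Made-up maximum dpd per obligor over a 12-month window
sample = [0, 15, 30, 65, 95, 120, 0, 75, 10, 0]
print(default_rate(sample, OPERATIONAL_DPD))  # 0.4 (4 of 10 obligors)
print(default_rate(sample, REGULATORY_DPD))   # 0.2 (2 of 10 obligors)
```

The tighter operational definition flags more obligors, so deteriorating accounts are picked up earlier, whilst the regulatory definition drives PD calibration.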

Default Estimation – Prior to modelling, a detailed understanding of the portfolio's historical default performance is required. The historical analysis that informs this understanding should cover a full economic cycle.

Rating Scales – Rating scales help banks to map and understand the risks associated with individual portfolios across the diverse range of products and segments offered by the bank. The overall scale should provide appropriate granularity whilst enabling robust estimates of PD (probability of default) within each grade. External ratings can be used as a benchmark against which the internal grades are compared.
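A master scale is, at its simplest, a set of PD bands. The sketch below assumes a hypothetical 10-grade scale (the boundaries are illustrative, not taken from the MMG) and maps a PD to its grade with Python's standard `bisect` module.

```python
import bisect

# Hypothetical 10-grade master scale: upper PD bound of each grade (grade 1 = best).
# Actual grade boundaries are set by each bank and approved through its governance.
GRADE_UPPER_BOUNDS = [0.0005, 0.001, 0.0025, 0.005, 0.01, 0.025, 0.05, 0.10, 0.20, 1.0]


def pd_to_grade(pd):
    """Return the 1-based grade whose PD band contains pd; each band
    includes its upper bound."""
    if not 0.0 <= pd <= 1.0:
        raise ValueError("PD must lie in [0, 1]")
    # bisect_left finds the first upper bound that is >= pd
    return bisect.bisect_left(GRADE_UPPER_BOUNDS, pd) + 1


print(pd_to_grade(0.003))  # 4, since 0.003 falls in the band (0.0025, 0.005]
```

Wider bands at the riskier end of the scale are a common design choice, as they keep enough obligors in each grade for a robust PD estimate.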

Model Construction and Use – Whereas retail models use standardised development methodologies, corporate models may require more bespoke methods due to portfolio complexity and low volumes. Models can use both quantitative and qualitative characteristics and should be supported by appropriate statistical analysis where possible. All methodologies need to be comprehensively documented, justified against any identified alternative approaches, independently validated and approved by the relevant committee.

Overrides – Ratings overrides are permitted (up or down) but must be documented with clear reference to the credit approval & governance structures of the bank.

Monitoring and Validation – Monitoring of the models and grades needs to be carried out on a regular basis with independent validation carried out to assess the continued validity of the modelling assumptions, the characteristics within the model and the data used for development and monitoring. Like the development, the validation needs to be approved by the appropriate oversight committees.

2. PD Models

Key Challenges

TTC models require an estimate that covers a full economic cycle, which may be lengthy, particularly in resource-led economies, and many institutions tend not to hold data across such long time periods.

The PiT model needs to determine at what point in the cycle the model is being built. This may be hard to establish (particularly if the time between peak and trough is long), making the use of scalars problematic.

These TTC and PiT issues apply to both PD and LGD models.

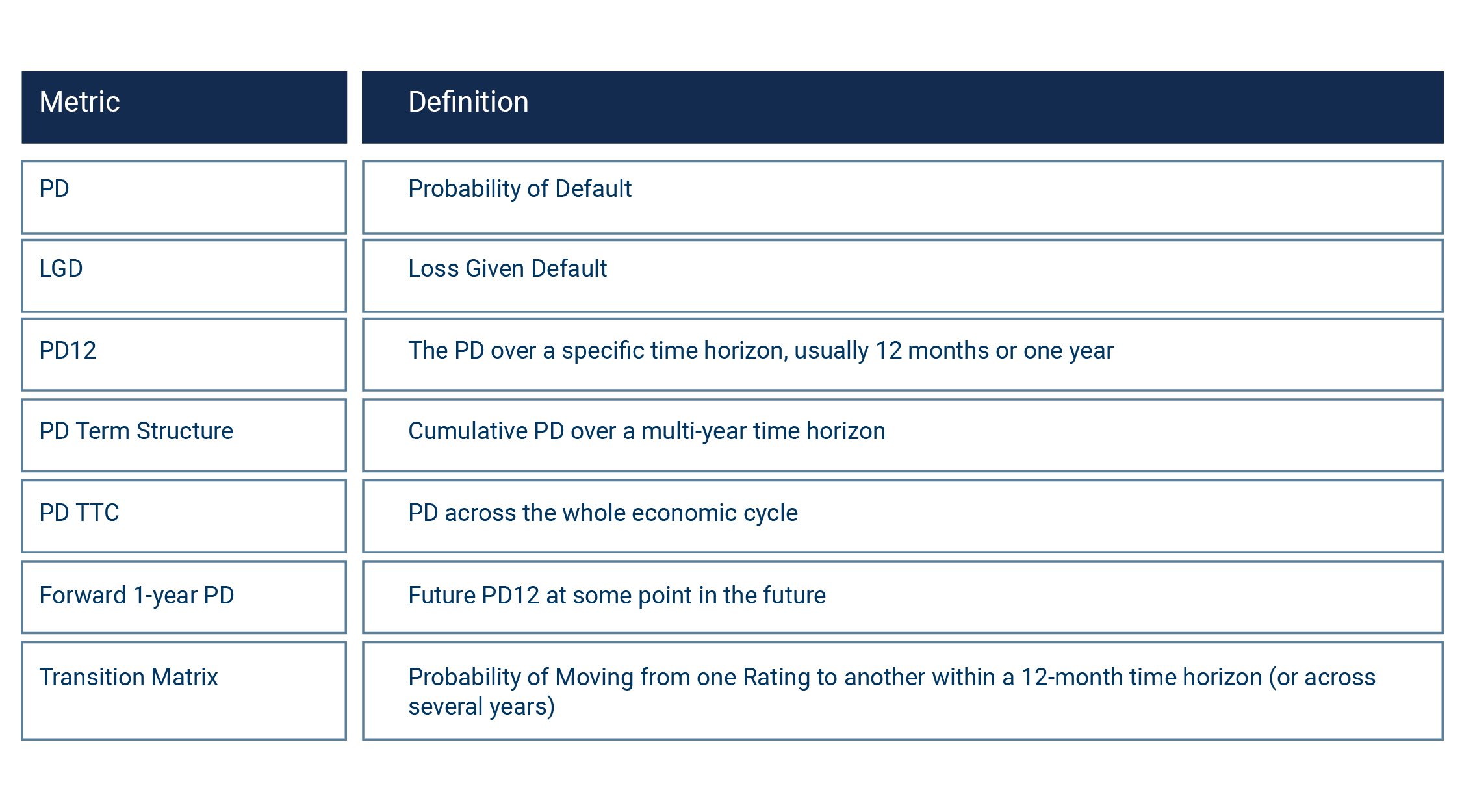

The following table gives a range of definitions that will be used in the PD section:

Default Rate Time Series – As with rating models, sufficient default history must be used to develop PD models, with stable and homogeneous PDs across the time horizon (a minimum of 5 years should be used), approved by the Model Oversight Committee.
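As a minimal sketch of the long-run average that a default rate time series feeds, assuming annual cohorts of (obligors at start of year, defaults during the year), the function below enforces the 5-year minimum mentioned above. The function name and data are hypothetical.

```python
MIN_YEARS = 5  # minimum history quoted above


def long_run_default_rate(yearly_counts):
    """Simple average of annual default rates.

    yearly_counts is a list of (obligors_at_start_of_year, defaults_during_year)
    tuples, one per year of history (hypothetical input format).
    """
    if len(yearly_counts) < MIN_YEARS:
        raise ValueError(f"need at least {MIN_YEARS} years of history, got {len(yearly_counts)}")
    rates = [defaults / obligors for obligors, defaults in yearly_counts]
    return sum(rates) / len(rates)


history = [(1000, 10), (1000, 20), (1000, 30), (1000, 15), (1000, 25)]  # made-up data
print(long_run_default_rate(history))  # approximately 0.02
```

A simple average gives each year equal weight; an obligor-weighted (pooled) average is an alternative, and whichever is used should be justified and documented.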

Ratings to PD – A common technique for PD estimation is mapping rating grades to Through the Cycle (TTC) PDs. However, the sensitivity of PDs and grades to the economic cycle needs to be considered, suggesting that Point in Time (PiT) models should be developed and then calibrated to TTC. The calibration needs to use a minimum of 5 years of data for wholesale portfolios.

PiT PD and Term Structures – Modelling decisions around PiT PDs and term structures will have a material impact on provisions and the associated management actions, with methodology decisions based on the desired granularity, the time steps used and the segmentation employed. From an MMG perspective, one of the following approaches should be used: transition matrices, portfolio averaging/scaling, or the Vasicek framework.

PiT PD with Transition Matrices – Transition matrices are a convenient tool that has limitations but offers practical advantages. The approach uses credit indices to map TTC matrices onto the PiT volatility of the economic cycle, with that volatility built into the transitions within the matrix. The matrices need to be robust and forward-looking.
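The step from one-year matrices to a cumulative PD term structure can be sketched in plain Python, assuming a hypothetical two-grade matrix with an absorbing default state. How a credit index is turned into a PiT matrix is not shown; the function simply accepts one matrix per year, so a forward-looking path would be supplied as a sequence of macro-conditioned matrices.

```python
# Hypothetical one-year transition matrix over grades A, B and default D.
# Rows are the current state, columns the state one year later; each row sums to 1
# and the last state (default) is absorbing.
P = [
    [0.90, 0.08, 0.02],
    [0.10, 0.80, 0.10],
    [0.00, 0.00, 1.00],
]


def matmul(a, b):
    """Plain-Python matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def cumulative_pd_term_structure(yearly_matrices):
    """Cumulative PD at each yearly horizon for every non-default starting grade.

    yearly_matrices[t] is the transition matrix applied in year t + 1. Supplying a
    different, macro-conditioned matrix for each year is how a PiT path would enter;
    repeating one matrix gives a time-homogeneous curve.
    """
    default_col = len(yearly_matrices[0]) - 1
    cumulative = None
    curve = []
    for m in yearly_matrices:
        cumulative = m if cumulative is None else matmul(cumulative, m)
        # Default column of the cumulative matrix, excluding the absorbing default row
        curve.append([row[default_col] for row in cumulative[:-1]])
    return curve


print(cumulative_pd_term_structure([P, P]))  # year 1: [0.02, 0.1]; year 2: approx. [0.046, 0.182]
```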

Portfolio Scaling Approaches – A scaling approach is simpler than transition matrices because it models averages rather than the dynamics of rating transitions, and it tends to be favoured for smaller segments. However, its drawback is that PD volatility is suppressed, which can result in underestimation.
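A minimal sketch of the scaling idea follows, assuming the scalar is the ratio of the current (or forecast) portfolio default rate to its long-run average. This is one common construction; the MMG summary above does not fix the formula, and the names and numbers are hypothetical.

```python
def scaled_pit_pd(ttc_pd, current_default_rate, long_run_default_rate, cap=1.0):
    """PiT PD from a TTC PD via a single portfolio-level scalar (hypothetical helper).

    The scalar is the ratio of the current (or forecast) portfolio default rate to
    its long-run average; every grade in the segment is scaled by the same factor.
    """
    if long_run_default_rate <= 0:
        raise ValueError("long-run default rate must be positive")
    scalar = current_default_rate / long_run_default_rate
    return min(ttc_pd * scalar, cap)  # cap keeps the result a valid probability


# Made-up inputs: TTC PD of 1%, current default rate 3% against a long-run 2%
print(scaled_pit_pd(0.01, 0.03, 0.02))  # approximately 0.015
```

Because one factor is applied to the whole segment, the approach captures the average level of the cycle but not movements between grades.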

Vasicek Credit Frameworks – The Vasicek framework is often used to model PiT PD term structures. However, institutions should be aware of its material challenges: it was designed to model economic capital and extreme portfolio losses, and its parameters are challenging to calibrate (in particular the correlation between asset values and risk factors, and their interaction with macroeconomic factors). The choice of methodology needs to be agreed by the Model Oversight Committee.
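The conditional PD formula at the heart of the one-factor Vasicek model is short; the difficulty highlighted above lies in calibrating the asset correlation `rho` and linking the systematic factor `z` to macroeconomic variables, which this sketch does not attempt. The inputs are hypothetical; `statistics.NormalDist` from the Python standard library supplies the normal CDF and its inverse.

```python
from statistics import NormalDist

_N = NormalDist()  # standard normal distribution


def vasicek_conditional_pd(ttc_pd, rho, z):
    """PD conditional on the systematic factor z in the one-factor Vasicek model:

        PD(z) = N((N^-1(PD) - sqrt(rho) * z) / sqrt(1 - rho))

    rho is the asset correlation; negative z represents a worse-than-average economy.
    """
    if not 0 < ttc_pd < 1 or not 0 <= rho < 1:
        raise ValueError("need 0 < PD < 1 and 0 <= rho < 1")
    return _N.cdf((_N.inv_cdf(ttc_pd) - rho ** 0.5 * z) / (1 - rho) ** 0.5)


# Hypothetical inputs: 2% TTC PD, 12% asset correlation, a downturn with z = -2
print(vasicek_conditional_pd(0.02, 0.12, -2.0))  # roughly 0.07
```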

Validation of PD Models – Regardless of the chosen methodology, PDs should be validated according to the MMS principles, with both qualitative and quantitative assessments covering a range of PD metrics at a granular level. Comprehensive validation reports should be produced that address the specific features of the models, compare results across several development methodologies, address low-default portfolios, and examine the differences between 1-year PDs and TTC PDs, cumulative default rates, etc. Other factors such as central tendency, back-testing and benchmarks should also form part of the validation report. All PDs should be economically consistent.
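Back-testing can take several forms. One common choice (our selection, not one prescribed by the MMG) is a binomial test of the defaults observed in a grade against the grade PD. The sketch below computes a one-sided p-value in log space so that large portfolios do not overflow; the function names and figures are hypothetical.

```python
from math import exp, lgamma, log


def binomial_pmf(n, k, p):
    """P(X = k) for X ~ Binomial(n, p), computed in log space to avoid overflow."""
    log_pmf = (lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)
               + k * log(p) + (n - k) * log(1 - p))
    return exp(log_pmf)


def binomial_backtest_pvalue(n_obligors, n_defaults, pd):
    """One-sided p-value P(X >= n_defaults) under the grade's PD.

    A small p-value suggests the PD is too low for the observed defaults. The test
    assumes independent defaults, so it overstates significance when defaults are
    correlated through the economic cycle.
    """
    if not 0 < pd < 1:
        raise ValueError("PD must lie strictly between 0 and 1")
    return sum(binomial_pmf(n_obligors, k, pd) for k in range(n_defaults, n_obligors + 1))


# Hypothetical grade: 500 obligors, PD of 1%, 11 observed defaults
print(binomial_backtest_pvalue(500, 11, 0.01))
```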

3. LGD Models

Key Challenges

Collection and recovery processes are continuously improving, so a consistent picture of the data is very difficult to achieve. The modelling therefore depends heavily on a range of assumptions to make the data representative of the portfolio going forward, which may add to modelling complexity and therefore increase model risk.

Data Collection – Robust data collection of loss and recovery information needs to be detailed within the Data Management Framework and include obligor and facility characteristics, recovery cash flows, collateral and asset values over time.

Historical Realised LGD – Realised LGD needs to be computed so that cash flows and costs over the workout period are linked to specific default events, with LGD expressed as a percentage of the exposure at default and cash flows discounted back to the default date. Institutions also need clear processes and assumptions for dealing with unresolved cases.
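A minimal sketch of the realised LGD calculation for a single resolved default, assuming recoveries net of costs are discounted at a single annual rate. The choice of discount rate and the treatment of unresolved cases are institution-specific; this sketch excludes unresolved cases and does not floor or cap the result. Names and figures are hypothetical.

```python
def realised_lgd(ead, cash_flows, annual_rate):
    """Realised LGD for one resolved default (hypothetical helper).

    ead: exposure at default.
    cash_flows: (years_after_default, recovery, cost) tuples over the workout period.
    annual_rate: discount rate used to bring flows back to the default date.
    """
    if ead <= 0:
        raise ValueError("EAD must be positive")
    pv_net_recovery = sum((recovery - cost) / (1 + annual_rate) ** t
                          for t, recovery, cost in cash_flows)
    return 1 - pv_net_recovery / ead


# Made-up workout: EAD 100, two recoveries with collection costs, 8% discount rate
flows = [(0.5, 30.0, 2.0), (2.0, 50.0, 5.0)]
print(realised_lgd(100.0, flows, 0.08))  # roughly 0.345
```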

Analysis of Realised LGD – Once the realised loss data has been extracted, it should be analysed to determine the key drivers of loss and inform the choice of modelling methodology. At a minimum, institutions should understand when the losses occurred within the economic cycle, the creditworthiness of the obligor at the time of default, and the facility and other characteristics. Three versions of LGD (downturn, growth and long-run average) then need to be calibrated to the TTC LGD and PiT LGD.

TTC & PiT LGD – The TTC LGD measures LGD independently of the economic cycle, whereas the PiT LGD incorporates the economy into the model. Each model needs appropriate risk drivers that relate the characteristics of the loan to its loss and recovery profile. For PiT models, LGD tends to be higher during recessions; this is difficult to assess in economies dependent on natural resources (e.g. oil in the UAE), but it is assumed to hold.

Validation of LGD – The construction of LGD models should follow the lifecycle stages detailed in the MMS, including the need to validate the models independently, both quantitatively and qualitatively, at development and at regular intervals thereafter. The validation scope covers data quality, the definition of default, loss and recovery, the methodologies employed for TTC and PiT, etc.

4. Macro Economic Models

Key Challenges

Macro modelling in the credit risk arena is a relatively new discipline, so it can be difficult to assess the range of model alternatives effectively. The expected relationships between banking metrics and the associated macroeconomic variables are often unknown, which risks incorrect variables being included in a candidate model. Modellers need to discuss and agree that observed trends are intuitive and in line with business and economic expectations.

Time Series Regression Model Scope – IFRS9 and stress testing are the main uses of macroeconomic modelling, where UAE financial institutions predict PD, credit indices and LGD. Given the nature and complexity of the models, statistical techniques are often combined with judgemental approaches, with key modelling choices discussed and agreed by the Model Oversight Committee.

Data Collection – Ideally, macroeconomic data should cover an entire economic cycle, and at a minimum should cover 5 years. Variables to be collected include GDP, oil prices and house prices, from several independent sources. The CBUAE can supply the data on an interim basis.

Analysis of Dependent Variable – Default time series should be representative of the current portfolio with descriptive statistics and expert judgement used to determine data suitability for modelling.

Variable Transformation – Variable transformations affect the macro models as well as ECL (Expected Credit Loss), so any derived variables should be tested, documented and applied to both macro and dependent variables. Transformations include those for stationarity, lags and smoothing.
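The three families of transformation mentioned above (stationarity, lags and smoothing) can be sketched as small list functions. The function names, and the use of growth rates as the stationarity transform, are illustrative assumptions; in practice the same transformation code should be shared by the macro and dependent series.

```python
def _check(series, periods):
    if periods < 1 or periods >= len(series):
        raise ValueError("periods must be at least 1 and shorter than the series")


def growth_rate(series, periods=4):
    """Growth over `periods` steps (e.g. year-on-year for quarterly data),
    a common transformation towards stationarity."""
    _check(series, periods)
    return [None] * periods + [series[i] / series[i - periods] - 1
                               for i in range(periods, len(series))]


def lag(series, periods=1):
    """Shift the series so that position t holds the observation from t - periods."""
    _check(series, periods)
    return [None] * periods + series[:len(series) - periods]


def moving_average(series, window=4):
    """Trailing moving average used for smoothing."""
    if not 1 <= window <= len(series):
        raise ValueError("window must be between 1 and the series length")
    return [None] * (window - 1) + [sum(series[i - window + 1:i + 1]) / window
                                    for i in range(window - 1, len(series))]


gdp = [100.0, 101.0, 102.0, 103.0, 104.0, 105.0]  # made-up quarterly index
print(growth_rate(gdp), lag(gdp, 2), moving_average(gdp, 3))
```

Leading `None` values mark periods lost to the transformation, which keeps every series aligned to the same dates.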

Correlation – The purpose of correlation analysis is to determine the strength of the relationship between the dependent variable (e.g. PD) and each macro variable, and to assess whether the relationships are plausibly causal and make economic sense. A correlation cut-off should be implemented to select macro variables for modelling.
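A sketch of a correlation cut-off screen, assuming Pearson correlation and a hypothetical cut-off of 0.3. Correlation alone does not establish causality, so the sign of each retained variable should still be checked against economic expectations. The function names and series are made up.

```python
from math import sqrt
from statistics import mean


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length series."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("series must have the same length (at least 2)")
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def shortlist(dependent, candidates, cutoff=0.3):
    """Keep candidate macro variables whose |correlation| with the dependent
    series meets the (hypothetical) cut-off; returns {name: correlation}."""
    selected = {}
    for name, series in candidates.items():
        r = pearson(dependent, series)
        if abs(r) >= cutoff:
            selected[name] = r
    return selected


dr_series = [0.010, 0.012, 0.020, 0.015, 0.011]  # made-up annual default rates
candidates = {
    "gdp_growth": [0.04, 0.03, -0.01, 0.02, 0.035],
    "oil_price_change": [0.10, 0.05, -0.30, 0.08, 0.12],
}
print(shortlist(dr_series, candidates))
```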

Model Construction – The aim of macro modelling is to build relevant and robust relationships between the dependent variable and several macro variables (selected on the basis of the correlation analysis), using an appropriate methodology to perform the multivariate regression. Model performance needs to be assessed using a range of metrics, as well as business and modeller judgement.
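The MMG, as summarised above, does not prescribe an estimator; ordinary least squares is one common starting point for the multivariate regression. The sketch assumes numpy is available and returns the coefficients with R²; the time-series diagnostics listed under Statistical Tests would still need to be run on any candidate model. Data and names are hypothetical.

```python
import numpy as np


def fit_ols(y, X):
    """Ordinary least squares of y on the columns of X plus an intercept.

    Returns (coefficients, r_squared), where coefficients[0] is the intercept.
    """
    y = np.asarray(y, dtype=float)
    design = np.column_stack([np.ones(len(y)), np.asarray(X, dtype=float)])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ coef
    r_squared = 1 - (residuals @ residuals) / np.sum((y - y.mean()) ** 2)
    return coef, r_squared


# Made-up annual data: columns are GDP growth and oil price change
macro = [[0.04, 0.10], [0.03, 0.05], [-0.01, -0.30], [0.02, 0.08], [0.035, 0.12], [0.01, -0.05]]
dr = [0.010, 0.012, 0.020, 0.015, 0.011, 0.017]
coef, r2 = fit_ols(dr, macro)
print(coef, r2)
```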

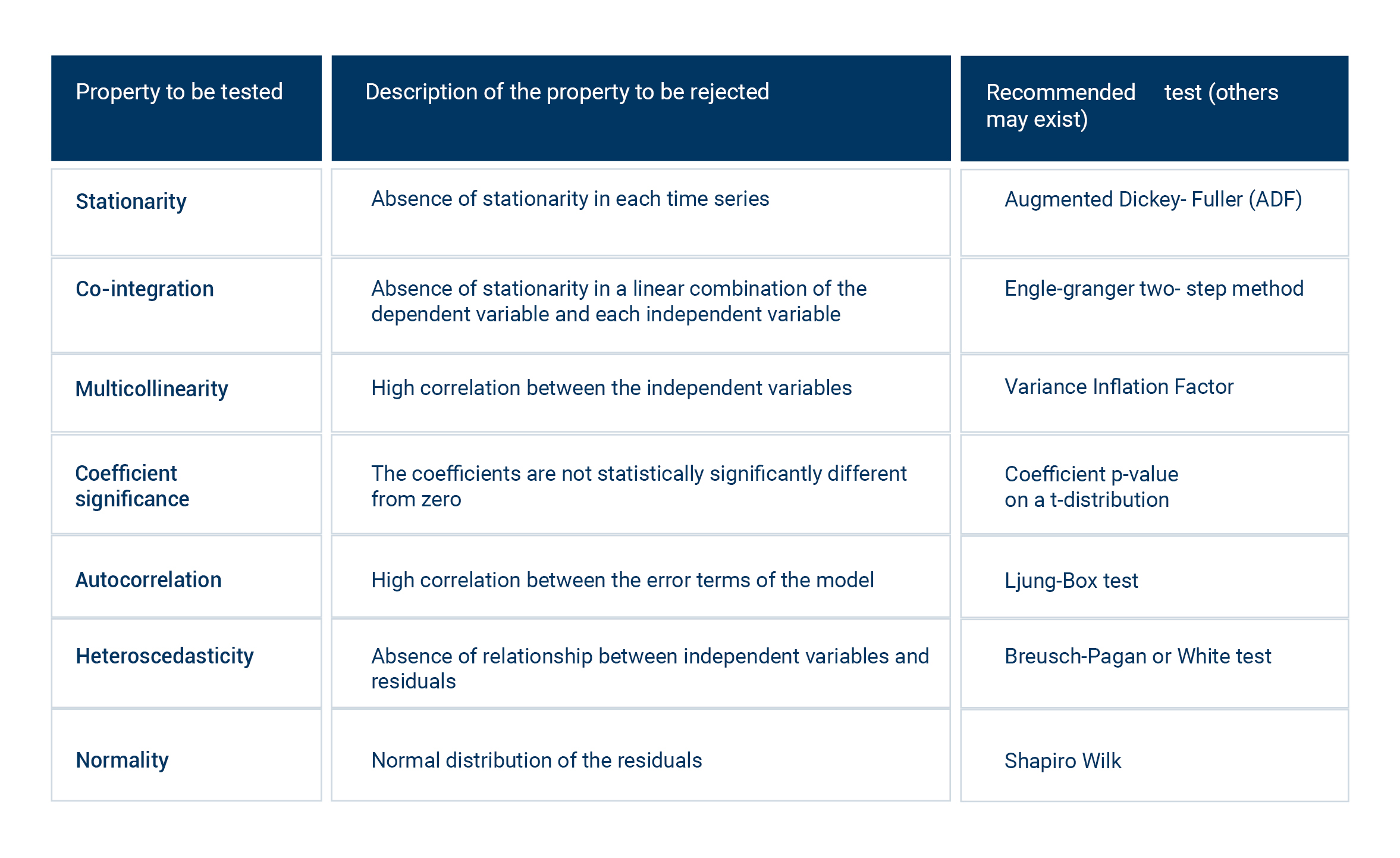

Statistical Tests

The following tests should be used across the macro modelling arena:

Model Selection – Model and macro variable selection should be based on clearly defined performance criteria, so that the output is consistent with historical experience and produces accurate predictions. The factors used to select from the pool of candidate models cover statistical performance (the model should be robust and stable on the development, hold-out and out-of-time samples), model sensitivity, business intuition, realistic outcomes and implementability. To test intuitiveness, the model should also be run under downturn scenarios. Model forecast uncertainty needs to be estimated, documented and reported to the Model Oversight Committee.

Validation of the model should be performed by an independent party, separate from the development team, following the MMS principles. As the volume of macro data is low, macro models can be monitored less frequently than other types of models, but at least annually.

Scenario Forecasting – A key requirement of IFRS9 is for the metrics to be forward-looking, so the macroeconomic models need to include macro variables for which forecasts will be available going forward. Three scenarios need to be included: baseline, upside and downside.
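IFRS9 requires expected credit losses to reflect an unbiased, probability-weighted amount, so the three scenario outputs are typically combined with scenario weights. The weights below are hypothetical; each institution sets and justifies its own, and the same function could be applied to PDs or to ECL directly.

```python
# Hypothetical scenario weights; each institution sets and justifies its own.
SCENARIO_WEIGHTS = {"baseline": 0.6, "upside": 0.2, "downside": 0.2}


def probability_weighted(values_by_scenario, weights=SCENARIO_WEIGHTS):
    """Probability-weighted average of a metric (e.g. PD or ECL) across scenarios."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("scenario weights must sum to 1")
    missing = set(weights) - set(values_by_scenario)
    if missing:
        raise ValueError(f"missing scenarios: {sorted(missing)}")
    return sum(w * values_by_scenario[s] for s, w in weights.items())


pit_pd = {"baseline": 0.020, "upside": 0.015, "downside": 0.035}  # made-up forecasts
print(probability_weighted(pit_pd))  # approximately 0.022
```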

5. Interest Rate Risk in the Banking Book (IRRBB) Models

Key Challenges

The scope of this section covers both conventional and Islamic products, with models addressing previously issued regulation on expected earnings and the value of the balance sheet. The IRRBB model requirements relate to governance, management, hedging and reporting.

Metrics – All interest-sensitive positions should be identified and reconciled against the general ledger, with variation in expected interest earnings captured by several metrics, including gap risk (the difference between future cash inflows and outflows), gap risk duration, economic value of equity (EVE) and net interest income (NII; net profit for Islamic products).
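Gap risk, as defined above, is the difference between inflows and outflows in each time bucket. A minimal sketch, assuming hypothetical buckets and made-up amounts, returns both the per-bucket gap and the running cumulative gap.

```python
def repricing_gaps(inflows_by_bucket, outflows_by_bucket):
    """Per-bucket gap (inflows minus outflows) and the running cumulative gap."""
    if len(inflows_by_bucket) != len(outflows_by_bucket):
        raise ValueError("inflows and outflows must use the same time buckets")
    gaps, cumulative, running = [], [], 0.0
    for inflow, outflow in zip(inflows_by_bucket, outflows_by_bucket):
        gap = inflow - outflow
        running += gap
        gaps.append(gap)
        cumulative.append(running)
    return gaps, cumulative


# Made-up amounts (AED m) in three hypothetical buckets: <1y, 1-5y, >5y
print(repricing_gaps([100, 50, 30], [80, 70, 10]))  # ([20, -20, 20], [20.0, 0.0, 20.0])
```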

Modelling – Models used to estimate interest rate risk need to follow the MMS guidelines and model lifecycle. Modelling assumptions are not the preserve of the ALM or market risk function alone but need to be agreed by the Model Oversight Committee, with modelling complexity determined by the size and sophistication of the institution. The model requirements aim to ensure that the modeller considers computation granularity, time buckets, option risk, commercial margins, basis risk, currency risk, scenarios and IT systems.

Interest Rate Scenario – Institutions should compute Delta EVE and Delta NII under 6 interest rate shock scenarios: parallel up, parallel down, steepener (short-term rates down and long-term rates up), flattener (short-term rates up and long-term rates down), short rate up and short rate down. The choice of shocks should be supported by appropriate governance, and the possibility of negative interest rates should be incorporated into the estimates.
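A sketch of Delta EVE under the six scenarios, assuming the shock shapes of the BCBS IRRBB standardised framework: short-rate shocks decaying with maturity, long-rate shocks growing with it, and steepener/flattener combinations weighted 0.65/0.9 and 0.8/0.6. The shock sizes, flat base curve and cash flows are hypothetical, and no post-shock floor is applied, so shocked rates may become negative.

```python
from math import exp

# Hypothetical shock sizes (decimal: 0.02 = 200bp); BCBS calibrates these by currency.
PARALLEL, SHORT, LONG = 0.02, 0.03, 0.015
DECAY = 4.0  # years; decay parameter of the short/long shock shapes


def short_shock(t):
    return SHORT * exp(-t / DECAY)


def long_shock(t):
    return LONG * (1 - exp(-t / DECAY))


SCENARIOS = {
    "parallel_up": lambda t: PARALLEL,
    "parallel_down": lambda t: -PARALLEL,
    "steepener": lambda t: -0.65 * short_shock(t) + 0.9 * long_shock(t),
    "flattener": lambda t: 0.8 * short_shock(t) - 0.6 * long_shock(t),
    "short_up": short_shock,
    "short_down": lambda t: -short_shock(t),
}


def eve(cash_flows, base_rate, shock=lambda t: 0.0):
    """Present value of net repricing cash flows [(t_years, amount)], discounted
    continuously at a flat base rate plus a maturity-dependent shock."""
    return sum(cf * exp(-(base_rate + shock(t)) * t) for t, cf in cash_flows)


def delta_eve(cash_flows, base_rate):
    """Change in EVE under each scenario relative to the unshocked curve."""
    base = eve(cash_flows, base_rate)
    return {name: eve(cash_flows, base_rate, s) - base for name, s in SCENARIOS.items()}


# Made-up net cash flows (assets minus liabilities) by maturity in years
net_flows = [(0.5, -200.0), (2.0, 80.0), (7.0, 150.0)]
for name, change in delta_eve(net_flows, 0.04).items():
    print(f"{name:>13}: {change:8.2f}")
```

Delta NII would need a separate earnings projection over the NII horizon and is not shown here.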

Validation of EVE & NII – All EVE and NII models in the IRRBB framework should be independently validated as per the MMS, applying the principles for both deterministic and statistical models and ensuring that assumptions and decisions are justified. The validator should check the mechanistic construction, that financial inputs flow through correctly, that the models are coherent and that behavioural patterns are correctly incorporated. Finally, the validation should support robust decision-making and management of interest rate risk.

Option Risk – Option risk is a fundamental building block of IRRBB models as it captures potential changes in future flows between assets and liabilities (where the options can be either implicit or explicit). To model option risk, the modeller needs to identify material products, ensure assumptions are justified by historical data, understand sensitivities, fully document the process and, for large complex institutions, incorporate option risk at a granular level.

6. Net Present Value (‘NPV’) Models

Key Challenges & Scope

The concept of Net Present Value is used to estimate various metrics in financial accounting, risk management and business decisions, such as asset valuations, investment values, collateral valuations and cost estimates in financial modelling. From an MMG perspective, NPV feeds into ECL, LGD and CVA models.

Governance – Standalone NPV models are included in the model inventory, subject to the model lifecycle management set out in the MMS and approved by the Model Oversight Committee. However, as deterministic models they are generally not recalibrated, but the assumptions and the consistency of the methodologies around the inputs should be reviewed and validated.

Methodology – The modelling of NPV is split into two parts: the mathematical mechanics (well documented in accounting rulebooks) and the choice of inputs (where institutions have a degree of autonomy).

Documentation - Standalone NPV Models need to be fully documented addressing the methodology, assumptions, inputs etc. with a dedicated document for each material valuation exercise that also details the business rationale and the prevailing economic climate.

Validation - As the NPV models are considered within the remit of the MMS a full independent validation of the methodology used, assumptions made, and inputs considered needs to be carried out on a regular basis. Particular attention needs to be paid to the application of credit premiums due to the degradation of creditworthiness when restructuring occurs.
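To illustrate the split between fixed mechanics and institution-chosen inputs, the sketch below keeps the discounting formula unchanged and varies only the discount rate: adding a hypothetical credit premium, of the kind referred to above for restructured exposures, lowers the NPV. The rates, premium and cash flows are made up, and this is not a statement of the accounting treatment for modifications.

```python
def npv(cash_flows, annual_rate):
    """Net present value of [(t_years, amount)] with annual compounding."""
    return sum(cf / (1 + annual_rate) ** t for t, cf in cash_flows)


# Made-up restructured facility: 5% coupon, 100 principal repaid at year 3
schedule = [(1, 5.0), (2, 5.0), (3, 105.0)]
base = npv(schedule, 0.05)                  # hypothetical 5% discount rate
with_premium = npv(schedule, 0.05 + 0.02)   # plus a hypothetical 2% credit premium
print(round(base, 2), round(with_premium, 2))  # 100.0 94.75
```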
