As the data revolution gathers pace, banking institutions around the world are using an ever-increasing number of data-driven models across an ever-expanding range of business and strategic decisions. The risk associated with using a poorly constructed or badly implemented model has grown to the point where regulators around the globe are increasingly concerned that greater exposure to model risk will lead to banking losses or insolvency, which in turn could harm the entire financial ecosystem.

Model risk is defined as the potential financial loss due to decisions that are based on either a flawed model or the inappropriate use of a model. A flawed model is one that is either poorly constructed (that is, it uses incorrect data, inappropriate techniques and/or short-cuts, among others) or incorrectly implemented. Inappropriate use means using a model outside its domain of applicability, for instance, using an IFRS 9 model to calculate capital requirements. Traditionally, regulators were only concerned with credit risk models, and would insist on a Margin of Conservatism being built into models to cover model risk. However, models are now used everywhere and the exposure to model risk has exploded. Several regulators around the world have issued, or plan to issue, model risk guidance in 2022.

The model risk guidance issued by the Central Bank of the United Arab Emirates (CBUAE) in the summer of 2022 has two goals: to reduce model risk across the firms that it regulates and to achieve consistency in standards and practices in this area. Two documents have been issued: a) the Model Management Standards (MMS) and b) the Model Management Guidelines (MMG). They apply to all licensed banks and finance companies in the UAE, irrespective of size or sophistication. The MMS essentially provides standards across the model lifecycle (build, validate, and monitor, among others), and hence applies to all models. On the other hand, the MMG covers specified model types (scorecards, PD, and NPV, among others).

From an implementation perspective, the CBUAE expects its regulated community to identify the gaps to MMS and develop a remediation plan within + months of the guidelines being finalised and issued. The clock is ticking or will be soon.

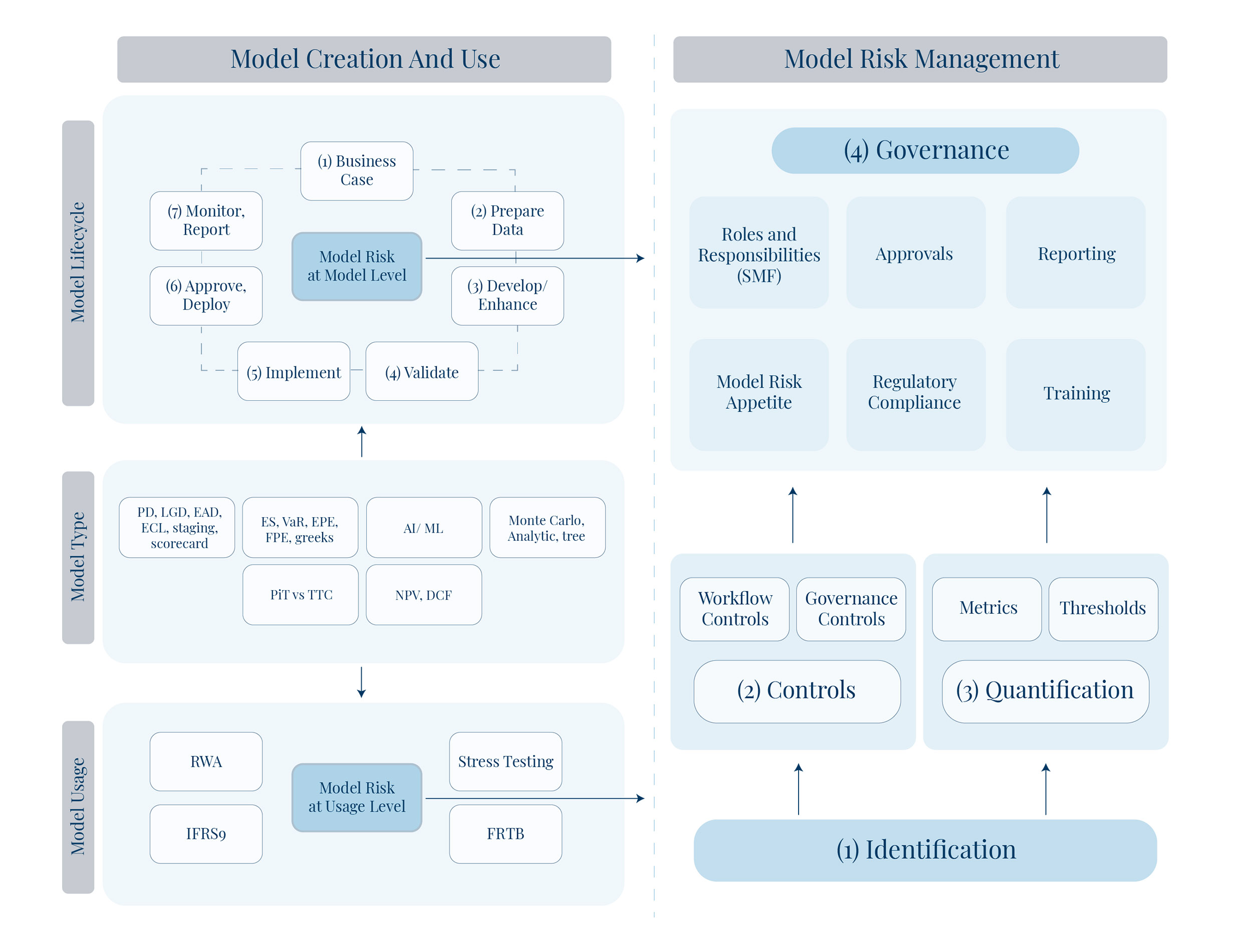

The essence of Model Risk Management can be stated simply and is summarised in the framework diagram below:

The MMS covers the model lifecycle and model risk management (the top-left and right-side of the figure). The MMG covers guidance on best practices for different types of models as they move through their lifecycle. To reiterate, the MMS and MMG are guidelines around best practice and are not intended to be step-by-step 'how to' handbooks (which would require several hundred pages). The MMS is structured in two parts, namely: general principles and specific guidelines on the application of those principles.

The following section briefly outlines the principles around the MMS, with specific details summarised within the subsequent sections. Following the MMS, the points are covered by lifecycle stage.

Institutions are expected to develop and maintain a set of policies and procedures that supports their internal models, whilst recognising that there may be gaps between the written policy and its practical implementation. The governance structure must embrace eight key concepts: model objectives, lifecycle, inventory, ownership, key stakeholders, relations with third parties, internal skills, and documentation.

Models must have a clear objective that is agreed amongst all stakeholders, thoroughly documented and in line with an agreed strategic goal. The strategy must define the expected contribution of third-party consultants to the development, management and validation of the models, with the institution retaining control of the models.

Institutions must manage each stage of the lifecycle, including development, pre-implementation validation, implementation, usage and monitoring, independent validation, and changes (recalibration or redevelopment).

All models need to be tracked through a comprehensive Model Inventory that covers model identification number (a recalibrated model is considered a new model), present and past usage, grouped by model risk or tier and model status (i.e., what stage of the life cycle the model is at).
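The inventory fields listed above map naturally onto a small record type. The following is a minimal sketch only: the class names, the numeric tier convention and the choice to restart a recalibrated model at the development stage are illustrative assumptions, not something prescribed by the MMS.

```python
from dataclasses import dataclass, field
from enum import Enum


class LifecycleStage(Enum):
    # Stages taken from the lifecycle list in the text.
    DEVELOPMENT = "development"
    PRE_IMPLEMENTATION_VALIDATION = "pre-implementation validation"
    IMPLEMENTATION = "implementation"
    USAGE_AND_MONITORING = "usage and monitoring"
    INDEPENDENT_VALIDATION = "independent validation"
    CHANGE = "recalibration or redevelopment"


@dataclass
class InventoryEntry:
    model_id: str                  # unique model identification number
    name: str
    tier: int                      # assumed convention: 1 = highest model risk
    stage: LifecycleStage          # model status
    current_usage: list = field(default_factory=list)
    past_usage: list = field(default_factory=list)


class ModelInventory:
    def __init__(self):
        self._entries = {}

    def register(self, entry):
        if entry.model_id in self._entries:
            raise ValueError(f"duplicate model id: {entry.model_id}")
        self._entries[entry.model_id] = entry

    def register_recalibration(self, old_id, new_id):
        """A recalibrated model is treated as a new model with its own ID."""
        old = self._entries[old_id]
        new = InventoryEntry(
            model_id=new_id,
            name=old.name,
            tier=old.tier,
            stage=LifecycleStage.DEVELOPMENT,   # assumption: restart the cycle
            current_usage=list(old.current_usage),
        )
        self.register(new)
        return new

    def by_tier(self, tier):
        """All entries in a given model risk tier."""
        return [e for e in self._entries.values() if e.tier == tier]
```

Keeping the old entry in place when a recalibration is registered preserves the record of past usage that the inventory is expected to hold.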

The concept of model ownership is fundamental to model management and to the MMS with each model requiring an assigned owner that is accountable for all decisions affecting that model as it passes through the lifecycle (risk models cannot be owned by the business).

Modelling decisions must have a clear governance process, with all decisions clearly documented. The parties involved in the decisions need to include model owner, developer, validator, user, data owner, and Model Oversight Committee.

The Model Oversight Committee must be separate from other existing risk committees and must meet at least quarterly, providing value-addition at each stage of the model lifecycle (particularly where strategic modelling decisions are made), with each member of the committee having sufficient understanding to be able to make informed technical decisions.

Where third parties are involved, the institution remains solely responsible for all key modelling decisions (although these may be informed by the third party) and is always the responsible model owner.

Dedicated documentation must be produced for each step of the model lifecycle. The documentation must be sufficiently comprehensive to ensure that any dependent party has all the necessary information to assess the suitability of the modelling decisions.

Institutions need to develop and retain internal modelling skills (this needs to be a key strategic focus of the institution) in line with the complexity of the models employed by the institution (if the skills are not commensurate with the complexity of the models, then the models should be simplified). Knowledge of the models should not be concentrated in a small number of key staff.

If an institution merges with or acquires another institution, it must revisit all the elements of the model management framework as part of the integration process.

Accurate and representative historic data is the backbone of financial modelling. Institutions should have a robust Data Management Framework (DMF) to be able to build accurate models. The DMF has several core principles, such as covering the scope and complexity of all the institution's models.

The key principles of the DMF are highlighted below:

Identification of Data Source: The DMF must include processes to identify and select relevant (to the organisation's business case) and representative data, either internal or external (if internal data is not available). Once the data source has been selected, it should be retained long enough for predictive time series to be established.

Data Collection: Data must be collected that is relevant to the institution's portfolio experience and sufficiently granular to allow modelling, i.e., it is specific enough to determine the risks associated with the portfolio and collected often enough to allow the construction of historical time series covering a range of risks faced by the institution, arranged to allow analysis of the appropriate drivers of risk. Data collection processes should be automated and comprehensively documented.

System Infrastructure: The institution must have appropriate strategic infrastructure that is scalable to support the Data Management Framework and should not be spreadsheet-based.

Data Storage: Once the data has been quality-reviewed and is ready to be used for development or validation, it should be stored in a central location. Final data sets cannot be stored on the computers of individual employees. The access of a final data set must be controlled and restricted to avoid unwarranted modifications.

Data Quality Review: The data quality review investigates whether the data is complete, accurate, consistent, timely and traceable.
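Three of the five data quality dimensions (completeness, timeliness and traceability) lend themselves to simple automated checks; accuracy and consistency usually need reference data or business rules and are left out here. The sketch below is illustrative only: the record schema, the field names and the 31-day staleness limit are assumptions, not CBUAE requirements.

```python
from datetime import date

# Hypothetical schema: every record should carry these fields.
REQUIRED_FIELDS = ("account_id", "balance", "as_of", "source")


def completeness(records):
    """Share of records in which every required field is populated."""
    if not records:
        return 0.0
    ok = sum(all(r.get(f) is not None for f in REQUIRED_FIELDS) for r in records)
    return ok / len(records)


def is_timely(records, today, max_age_days=31):
    """True if the latest observation is no older than max_age_days."""
    dates = [r["as_of"] for r in records if r.get("as_of") is not None]
    return bool(dates) and (today - max(dates)).days <= max_age_days


def untraceable(records):
    """Account IDs of records that carry no source-system tag."""
    return [r.get("account_id") for r in records if not r.get("source")]


sample = [
    {"account_id": "A1", "balance": 1200.0, "as_of": date(2022, 6, 30), "source": "core_banking"},
    {"account_id": "A2", "balance": None, "as_of": date(2022, 6, 30), "source": ""},
]
report = {
    "completeness": completeness(sample),
    "timely": is_timely(sample, today=date(2022, 7, 31)),
    "untraceable": untraceable(sample),
}
```

A review of this kind would typically be run before a data set is promoted to the central, access-controlled store of final data sets.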

Steps in Model Development must follow a structured approach and be fully documented and approved by the Model Oversight Committee. At a minimum, this covers data preparation, investigation, characteristic creation, sampling, methodology, model build & calibration, and validation.

For the purpose of risk models, a certain degree of conservatism should be incorporated in each of the steps above to compensate for uncertainties.

The Data Preparation and Exploration stage states that the data used for modelling should be representative (especially from a time period, type of borrower and segment perspective) and that any manipulation or transformation undertaken does not change the representative nature of the raw data.

Data Transformations should be carried out to maximise the predictiveness of the chosen variables and used with a clear purpose.

If Sampling (statistical models) is used, the modelling team should ensure that the chosen samples (across time periods and segments) are representative of the target variable being modelled, with the sampling methodology being documented.

The Choice of Modelling Methodology used must be conscious, rigorous, documented and appropriate to the effect being modelled. If suggested by a third party, the choice of methodology should be owned by the institution, with the alternatives considered, appropriately documented and approved by the Model Oversight Committee.

The Model Construction of statistical models needs to be based on established industry techniques to reach a robust assessment of the effect being modelled, one that demonstrates an understanding of the characteristics and of the relationships between the dependent and independent variables.

Deterministic models, such as financial forecasting models or valuation models, do not have statistical confidence intervals. Instead, the quality of their construction should be tested through:

a) a set of internal consistency and logical checks; and,

b) comparison of the model outputs against analytically derived values.
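Both kinds of test can be illustrated on a toy valuation model. The sketch below assumes a simple net present value function for end-of-period cash flows and checks it against the standard closed-form value of a level annuity; the function names and the parameter values are illustrative assumptions.

```python
import math


def npv(rate, cash_flows):
    """Present value of cash flows paid at the end of periods 1..n."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows, start=1))


def annuity_pv(rate, payment, n):
    """Closed-form present value of a level annuity (rate must be non-zero)."""
    return payment * (1 - (1 + rate) ** -n) / rate


def check_npv_model(rate=0.05, payment=100.0, n=10):
    flows = [payment] * n
    return {
        # (a) internal consistency: at a zero rate, NPV is the plain sum
        "zero_rate_equals_sum": math.isclose(npv(0.0, flows), sum(flows)),
        # (a) logical check: a higher discount rate lowers the value
        "monotone_in_rate": npv(rate + 0.01, flows) < npv(rate, flows),
        # (b) comparison against an analytically derived value
        "matches_closed_form": math.isclose(
            npv(rate, flows), annuity_pv(rate, payment, n), rel_tol=1e-9
        ),
    }


checks = check_npv_model()
```

Each entry in `checks` is a boolean, so a failed test points directly at the property that the implementation violates.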

Expert-based Models also need to be managed as part of the model lifecycle and have a structured development process and detailed documentation to allow independent validation. The subjective inputs into the model need to form part of the future data collection plan of the institution, as documented in the DMF. The decision to use an expert model needs to be justified and approved by the Model Oversight Committee.

Institutions must develop a pool of alternative models and have an explicit mechanism for Model Selection. The selected model should meet a minimum performance threshold, make business sense, be economically intuitive and be capable of being implemented.

Model Calibration is necessary to ensure that models are suitable to support business and risk decisions. Institutions must ensure that model calibration is based on relevant data that represents appropriately the characteristics and the drivers of the portfolio subject to modelling.

The Pre-implementation Validation must cover a subset of the full independent validation that occurs at the end of the cycle. The objective of this step is to ensure that the model is fit for purpose and generates results that meet business sense and economic intuition. At a minimum, the validation should cover prediction accuracy, stability and robustness, and should assess model sensitivity.

Validation results should be documented to include any model limitations, assumptions made etc. The validation report should also include an impact analysis.

Institutions must consider model implementation as a separate phase within the model lifecycle. The model development phase must take into account the potential constraints of model implementation. However, successful model development does not guarantee a successful implementation. Consequently, the implementation phase must have its own set of principles.

The implementation of a model must be treated as a project with clear governance, planning, funding and timing. The implementation project must be fully documented and, at a minimum, should include the following components: scope, plan, roles and responsibilities, roll-back plan and User Acceptance Testing with test cases.

The IT system infrastructure must be designed to cope with the demand of the model sophistication and the volume of regular production. Institutions must assess that demand during the planning phase. The IT system infrastructure should include, at a minimum, three environments: development, testing, and production. Institutions must ensure that a User Acceptance Testing (UAT) phase is performed as part of the system implementation plan. The objective of this phase is to make certain that the models are suitably implemented according to the Business Specifications document. The model implementation team must define a test plan and test cases to assess the full scope of the system functionalities, both from a technical perspective and a modelling perspective. There should be at least 2 rounds of UAT to guarantee the correct implementation of the model.

Institutions must ensure that a production testing phase is performed as part of the system implementation plan. In particular, the production testing phase must verify that systems can cope with the volume of data in production and can run within an appropriate execution time.

The use of spreadsheet tools for the regular usage of models and the production of metrics used for decision-making is not recommended. More robust systems are preferred. Nevertheless, if spreadsheets are the only possible modelling environment available initially, certain minimum quality standards apply.

Model Usage is independent of model quality. Misused models will produce counter-intuitive results. From a usage perspective the model strategy must include how the models will be used, who uses them, how often they are used, what the inputs and outputs are, any limitations concerning the outputs and the governance process around overrides.

Usage Responsibilities are shared across several parties but are ultimately approved by the Model Oversight Committee.

Monitoring of model use is performed by a number of teams, including the model owner, validator, and monitoring team, among others. The validation of usage also needs to be independent, with Model Owners responsible for reporting misuse to the Model Oversight Committee.

Manual overrides of model outputs are possible and permitted, but within limits. Institutions must establish robust governance to manage output overrides at the appropriate authority level. Overrides must be the subject of discussions between the development team, the validation team and the model user. If a model has been approved and deemed suitable for production, its outputs cannot be ignored. Model overrides must be analysed, tracked and reported to the Model Oversight Committee as part of the monitoring and validation processes. Models whose outputs are frequently and materially overridden must not be considered fit for purpose and should be recalibrated or replaced.
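Tracking how often and how materially outputs are overridden can be reduced to two ratios. The sketch below is one possible way to compute them: the `Decision` record and the 10% relative materiality threshold are hypothetical choices for illustration, since the text does not quantify "frequently" or "materially".

```python
from dataclasses import dataclass


@dataclass
class Decision:
    model_output: float   # e.g. a model PD or score
    final_value: float    # value actually used after any override


def override_summary(decisions, material_change=0.10):
    """Override frequency and share of material overrides.

    material_change is an assumed relative threshold, not a CBUAE figure.
    """
    total = len(decisions)
    overridden = [d for d in decisions if d.final_value != d.model_output]
    material = [
        d for d in overridden
        if abs(d.final_value - d.model_output) > material_change * abs(d.model_output)
    ]
    return {
        "override_rate": len(overridden) / total if total else 0.0,
        "material_rate": len(material) / total if total else 0.0,
    }


decisions = [
    Decision(0.02, 0.02),    # output used as produced
    Decision(0.02, 0.03),    # overridden, 50% relative change
    Decision(0.05, 0.051),   # overridden, 2% relative change
    Decision(0.10, 0.10),    # output used as produced
]
summary = override_summary(decisions)
```

Figures of this kind could feed the monitoring and validation reports that go to the Model Oversight Committee.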

Institutions must pay particular attention to the usage of rating models due to their material impacts on financial reporting, provisions, risk decisions and business decisions. At a minimum, a rating should be assigned to each obligor on a yearly cycle.

The process for Model Performance Monitoring must be performed on a regular basis, with the analysis consistent with model use. A robust model does not guarantee relevant use, but inappropriate use may affect model performance. The aim of the monitoring is to ensure that the model consistently performs in changing economic and market conditions. Performance monitoring should use model-appropriate metrics and support decisions around continued use or redevelopment. Responsibility for monitoring should be clearly defined and could be carried out by developers, validators or external teams. Monitoring reports for all models need to be regularly presented to the Model Oversight Committee, where any threshold breaches should be discussed.

Frequency of model monitoring is determined in the model lifecycle and should be appropriate to the model use (more frequent than independent validations). The metrics should be appropriate to the model type and use but, at a minimum, should assess accuracy, stability and use.

Monitoring reports need to be circulated to relevant teams, including the developers and validators as soon as they are produced. Any significant breaches of thresholds (that are model / use specific) must be reported to the Model Oversight Committee (who will decide whether to suspend the model's use or accelerate the model review process/redevelopment schedule).
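A stability metric with breach thresholds might look as follows. The Population Stability Index (PSI) is used here purely as a familiar industry example; the text does not name a specific metric, and the 0.10 and 0.25 thresholds are common rules of thumb rather than CBUAE values.

```python
import math


def psi(expected, actual, floor=1e-6):
    """Population Stability Index between two binned distributions.

    expected and actual are counts per bin, in the same bin order.
    floor avoids taking the logarithm of zero for empty bins.
    """
    if len(expected) != len(actual):
        raise ValueError("bin counts must align")
    e_tot, a_tot = sum(expected), sum(actual)
    total = 0.0
    for e, a in zip(expected, actual):
        e_pct = max(e / e_tot, floor)
        a_pct = max(a / a_tot, floor)
        total += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return total


def stability_status(value, amber=0.10, red=0.25):
    """Map a PSI value to a traffic-light status (assumed thresholds)."""
    if value >= red:
        return "red"
    if value >= amber:
        return "amber"
    return "green"


development_bins = [50, 50]   # score distribution at development
current_bins = [80, 20]       # score distribution in the latest period
status = stability_status(psi(development_bins, current_bins))
```

A "red" status would be the kind of significant threshold breach that is reported to the Model Oversight Committee.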

Independent Validation of the models and monitoring reports (among others) is an integral part of the model lifecycle process and aims to offer a comprehensive and separate review of the model development and use environment. Therefore, a key input into the process is the monitoring report. All models regardless of development methodology or source require independent oversight.

Validation needs to be both quantitative and qualitative, as each views the model from a different perspective.

If there is insufficient data for a quantitative review, then this needs to be flagged to the Model Oversight Committee (to make an appropriate decision on model use); a qualitative-only approach is not sufficient.

The scope must be comprehensive, and should clearly state and include all model features necessary to assess model performance. The exercise must result in an independent judgement of model suitability and must not relate solely to features and performance.

Any uncovered issues need to be graded by severity (with this feeding into how the issue is resolved). The Validation Process should also estimate the model risk associated with use of the model (which would be a combination of the uncertainty and materiality).

From a responsibility perspective, institutions must ensure that the process is completely independent of development and monitoring (internal or external teams can be used). The validation team must contain the same skillsets as the development team (to adequately assess the appropriateness of the development methodology). A key function of the team is the ability to hold, express and defend model opinions that differ from those of the development team, potentially supported by external research into modelling alternatives.

Internal Audit is ultimately responsible for ensuring that the validation is correctly undertaken.

Qualitative Validation covers the governance, conceptual soundness, purpose of the models, the methodology used and the suitability of inputs / outputs.

For statistical models the qualitative validation also needs to review the choice of model inputs and the sampling technique used.

Quantitatively, the validation needs to match the monitoring reports (which are an integral part of validation). The quantitative review also needs to assess model accuracy and conservatism, stability and robustness, and the sensitivity of the models to input changes.

The review must also rely on numerical analysis to reach its conclusions, which may include partial or complete replication of the models. As a minimum, the quantitative validation needs to cover implementation, adjustments and scaling, hard-coded rules, extrapolation and interpolation, industry benchmarks, and sensitivities to input changes.
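Sensitivity to input changes is often examined by shocking one input at a time. The sketch below assumes a 1% relative bump and uses a toy expected-loss model (PD x LGD x EAD) purely as a stand-in; neither the shock size nor the model comes from the MMS.

```python
def one_at_a_time_sensitivity(model, base_inputs, bump=0.01):
    """Relative change in output when each input is bumped by `bump`.

    Assumes the base output is non-zero and all inputs are numeric.
    """
    base_out = model(**base_inputs)
    results = {}
    for name, value in base_inputs.items():
        shocked = dict(base_inputs, **{name: value * (1 + bump)})
        results[name] = (model(**shocked) - base_out) / base_out
    return results


# Hypothetical toy model: expected loss = PD x LGD x EAD.
def expected_loss(pd, lgd, ead):
    return pd * lgd * ead


sensitivities = one_at_a_time_sensitivity(
    expected_loss, {"pd": 0.02, "lgd": 0.45, "ead": 1000.0}
)
```

For this multiplicative toy model every input has the same 1% effect; a real model would typically show uneven sensitivities, and the largest ones indicate where input quality matters most.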

Validation must be conducted at a frequency appropriate to model use and type, with the CBUAE prescribing a timeline for monitoring and validation by model type.

The analysis done for validation needs to be rigorous and documented in a detailed validation report that is reviewed and agreed on by the Model Oversight Committee. The report must be practical, action-oriented, and focused on findings. At a minimum, it should include details to identify and describe the model being validated, any model issues and recommendations on remediation (with remediation plans based upon the severity of the issue).

The Validation report needs to be discussed between the validators and developers with an aim to agreeing upon an understanding of model weakness and appropriate remediation actions and timelines. The report needs to be presented to the Model Oversight Committee.

Institutions need to address model defects in a timely manner, with high severity issues that need a technical solution being addressed first, regardless of model tier.

An institution may utilise a temporary fix, such as an overlay, whilst remediation is in process. Once issues are raised, their severity must be agreed between the developers and validation team and a maximum remediation period set. If there is a high severity issue unresolved after 6 months, progress needs to be reported to senior management and CBUAE.
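The six-month escalation rule can be expressed as a simple check on an issue log. This is a sketch under stated assumptions: the `Issue` record, the three-level severity scale and the whole-calendar-month counting convention are illustrative choices rather than part of the guidance.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Issue:
    issue_id: str
    severity: str                        # "high", "medium" or "low" (assumed scale)
    raised_on: date
    resolved_on: Optional[date] = None   # None while the issue is open


def months_between(start, end):
    """Whole calendar months elapsed from start to end."""
    months = (end.year - start.year) * 12 + (end.month - start.month)
    return months - 1 if end.day < start.day else months


def needs_escalation(issue, today, limit_months=6):
    """True for an unresolved high-severity issue open for limit_months or more."""
    return (
        issue.severity == "high"
        and issue.resolved_on is None
        and months_between(issue.raised_on, today) >= limit_months
    )


open_issue = Issue("I-1", "high", date(2022, 1, 15))
escalate = needs_escalation(open_issue, today=date(2022, 7, 15))
```

Running such a check over the full issue log on each reporting date gives the list of items whose progress is due to be reported to senior management and the CBUAE.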
